Why Simply Accepting Data as Delivered May Be a Game-Changer for Customer MDM

Achieving a single, accurate, and holistic view of your customer is the brass ring of customer data management (CDM). Such an aspiration often hits a roadblock when dealing with diverse data sources, particularly those outside your direct control.

Many traditional data integration paradigms advocate strict, blocking validation at the point of ingestion, ensuring that only “perfect” data enters the target system. While this seems ideal, it can be a significant impediment when integrating data from external systems or legacy platforms whose data cannot easily be cleansed or restructured upstream.

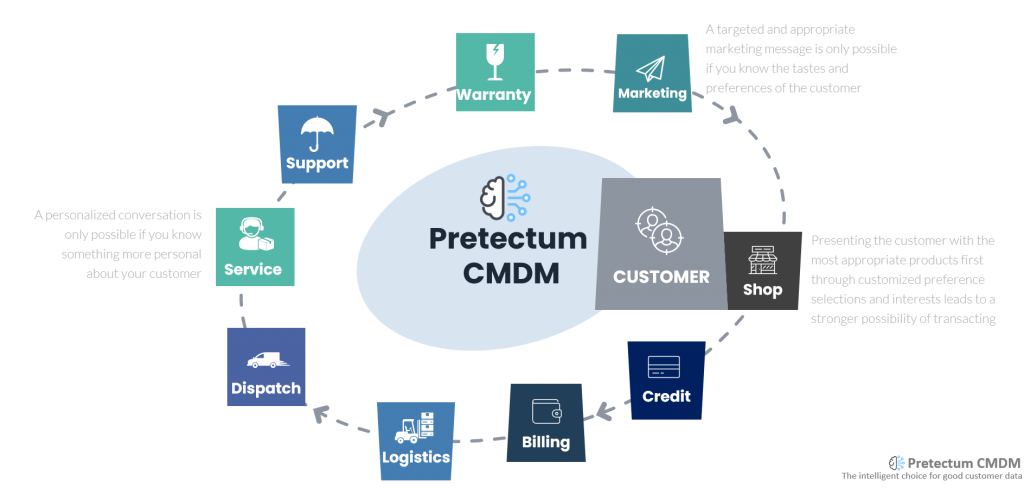

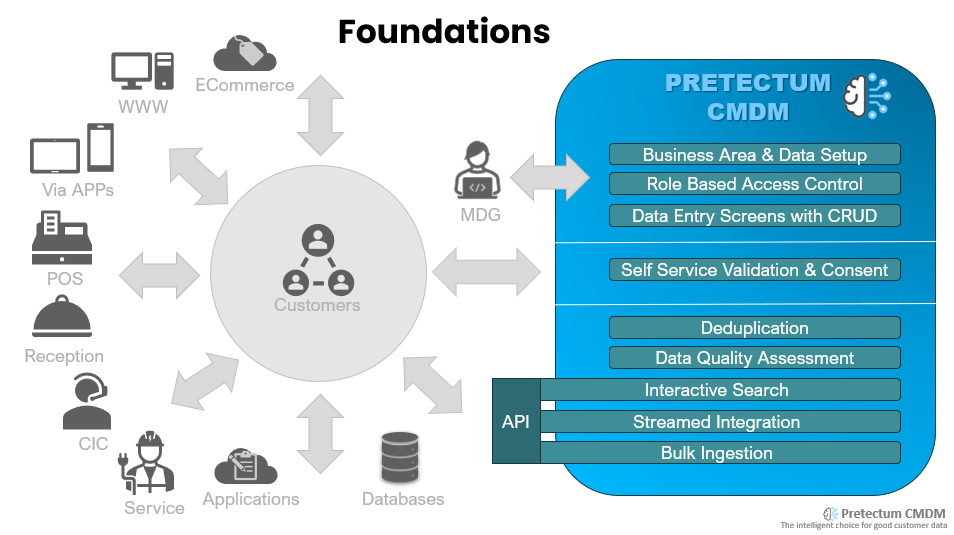

The concept of “Capture, Receive, Update” (CRU), or data that is “Accepted as Delivered,” is championed by solutions such as Pretectum Customer MDM, and it can offer profound benefits to your organization. Instead of rejecting data outright, CRU ingests data as it arrives and flags discrepancies for later remediation, fundamentally altering the approach to customer data staging.

You may also see CRU expanded as “Centralized, Raw and Unstructured” to describe the same “Accepted as Delivered” principle. The problem with that expansion is that the data may well be structured yet problematic, and it may not be centralized.

Whichever expansion you prefer, the shift to CRU has significant implications. Most notably, it favors an ELT (Extract, Load, Transform) paradigm over a traditional ETL (Extract, Transform, Load) one, which can be advantageous under certain data management and data governance circumstances.

The Challenge of Imperfect Data from Sources Beyond Your Reach

The sprawling nature of enterprise data means that customer data could reside in dozens of disparate systems: a legacy CRM from a recent acquisition, third-party marketing automation platforms, a customer service portal, bespoke sales tracking applications, and even spreadsheets.

Each of these systems might have its own data definitions, formatting eccentricities, and varying levels of data quality. Some might contain outdated information, others might have inconsistent spellings, and many might lack proper data validation at their source.

If you’ve tried to consolidate data from such environments before, then you know that integrating such data into a master data management (MDM) solution often involves a heavy ETL process.

Data would be extracted from the source, meticulously transformed to meet the MDM’s rigid schema and validation rules, and only then loaded into the staging area or master repository. If transformations failed due to data quality issues, the entire ingestion process could halt, or problematic records would be outright rejected, leaving a trail of unintegrated, potentially valuable customer insights.

This approach creates several headaches for integrators and administrators, especially when it comes to migrations:

- Integration Bottlenecks: The transformation layer becomes a choke point, especially with high volumes of data. Unforeseen data anomalies can break data pipelines or contaminate target systems.

- Data Loss: Rejecting records means losing potential customer touchpoints or historical context. Often only a single attribute is “bad”, yet the whole record fails.

- Upstream Burden: Bifurcating data streams into the “good” and the “bad” places the burden of perfect data quality on the source systems. That is not necessarily a bad thing, but there may be very good reasons why the data cannot be corrected at source: the source may be external, legacy, or simply not designed for rigorous outbound data standards. Requiring such systems to conform perfectly is often impossible or cost-prohibitive.

- Reduced Agility: Iterating on data integration becomes slow and cumbersome, because a change to validation rules or transformations may require re-engineering the entire ETL pipeline.
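To make the data-loss problem concrete, here is a minimal Python sketch of blocking, record-level validation. The rules, field names, and sample records are hypothetical and chosen only for illustration; note how a single malformed email causes the entire record, including a perfectly good name, to be rejected.

```python
import re

# Hypothetical validation rules, for illustration only.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record: dict) -> list[str]:
    """Return the names of attributes that fail validation."""
    errors = []
    if not record.get("customer_id"):
        errors.append("customer_id")
    if not EMAIL_RE.match(record.get("email") or ""):
        errors.append("email")
    return errors


def strict_etl(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Blocking ETL: any failing attribute rejects the whole record."""
    loaded, rejected = [], []
    for rec in records:
        if validate(rec):
            rejected.append(rec)  # the good attributes are lost along with the bad one
        else:
            loaded.append(rec)
    return loaded, rejected


source = [
    {"customer_id": "C1", "name": "Ada Lovelace", "email": "ada@example.com"},
    {"customer_id": "C2", "name": "Alan Turing", "email": "alan[at]example.com"},
]
loaded, rejected = strict_etl(source)
print(len(loaded), "loaded,", len(rejected), "rejected")
```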

The CRU Advantage: Embracing Imperfection for Greater Benefit

“Capture, Receive, Update” flips the pipeline script. Instead of pre-emptively blocking problematic data, it says: “Bring it in. Let’s see what we have.”

- Capture: A system that supports this approach is designed to capture data efficiently from diverse sources, regardless of its initial quality or adherence to target schema rules. This is where lightweight ETL can still play a role, performing simple, non-blocking transformations (e.g., standardizing case, basic parsing) to make the data more digestible, but crucially not for strict validation. (Pretectum does this using Excel-like functions as part of the load definition.)

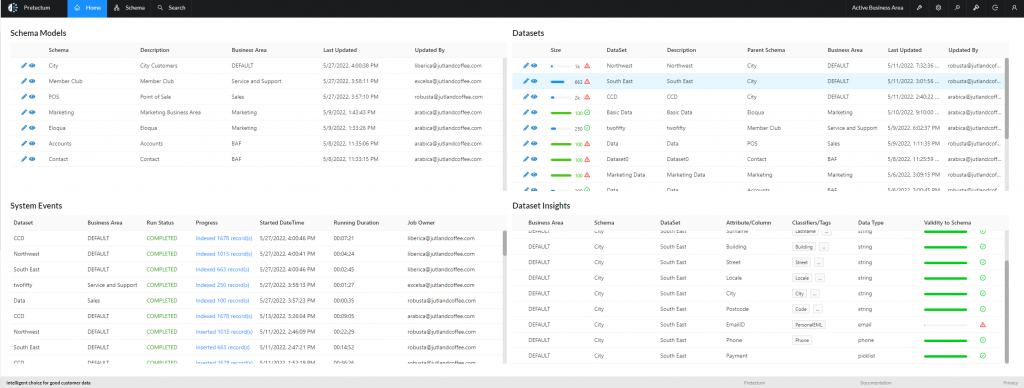

- Receive: All captured data is received into the staging area or dataset. No records are rejected outright at this phase due to validation failures, though problematic records are annotated as such.

- Update/Flag: Once received, the system applies its defined data validations against the ingested data. However, instead of blocking, it flags individual attributes or records that do not conform. This creates a clear picture of the data’s quality issues within the system.
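The three phases can be sketched in a few lines of Python. This is an illustrative analog only, not Pretectum's implementation or API (Pretectum configures capture through Excel-like functions in the load definition); the rules, field names, and sample data are hypothetical. Lightweight clean-up happens on capture, every record is received, and failing attributes are flagged rather than causing rejection.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rule set: attribute name -> predicate that must hold.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
RULES = {
    "customer_id": lambda v: bool(v),
    "email": lambda v: bool(v) and EMAIL_RE.match(v) is not None,
    "country": lambda v: v is None or len(v) == 2,  # optional 2-letter code
}


@dataclass
class StagedRecord:
    data: dict
    flags: dict = field(default_factory=dict)  # attribute -> reason it was flagged


def capture(raw: dict) -> dict:
    """Capture: lightweight, non-blocking clean-up (trim, standardize case)."""
    out = {k: v.strip() if isinstance(v, str) else v for k, v in raw.items()}
    if out.get("email"):
        out["email"] = out["email"].lower()
    if out.get("country"):
        out["country"] = out["country"].upper()
    return out


def ingest(raw_records: list[dict]) -> list[StagedRecord]:
    # Receive: every captured record lands in staging; nothing is rejected.
    staged = [StagedRecord(capture(raw)) for raw in raw_records]
    # Update/Flag: apply validations and annotate the failing attributes.
    for rec in staged:
        for attr, rule in RULES.items():
            if not rule(rec.data.get(attr)):
                rec.flags[attr] = "failed validation"
    return staged


source = [
    {"customer_id": "C1", "name": " Ada Lovelace ", "email": "ADA@Example.com", "country": "gb"},
    {"customer_id": "C2", "name": "Alan Turing", "email": "alan[at]example.com", "country": "United Kingdom"},
]
staged = ingest(source)
for rec in staged:
    print(rec.data["customer_id"], rec.flags)
```

Both records land in staging; the second simply carries flags on `email` and `country` for a data steward to work through, while its valid name and ID remain available.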

Why is this a significant benefit for Customer MDM staging?

- Comprehensive Data Ingestion: You get all the data. Even records with quality issues are brought into your staging environment, preventing data loss and ensuring a comprehensive view of customer interactions. This is vital for uncovering hidden relationships or historical patterns that might otherwise be discarded.

- Reduced Integration Friction: The immediate pressure to perfectly cleanse upstream is removed. Integration pipelines become simpler, faster, and more robust as they are not constantly failing due to data quality exceptions. This is particularly beneficial when dealing with third-party data feeds or systems beyond your direct control.

- In-System Data Quality Remediation: Because invalid data is flagged within the Pretectum MDM platform, responsibility for data quality remediation shifts from the source system to the solution itself. Data stewards can then use the platform's capabilities (such as data validation screens, bulk editing, or even AI-assisted suggestions) to cleanse and enrich the data after it has been ingested.

- Accelerated Time-to-Value: Getting data into the system quickly, even if imperfect, allows for earlier analysis and identification of critical issues. You don’t have to wait for perfect data to begin understanding your customer landscape.

- Auditability and Transparency: Every record is ingested, and its validation status is logged. This provides a complete audit trail of the data’s journey and its quality profile, which is crucial for compliance and governance.

- Flexibility in Schema Evolution: As your business needs change and your schemas evolve, the CRU approach makes it easier to adapt. New validation rules can be applied to existing data, and the system will simply flag non-conformant records, rather than requiring re-ingestion or complex backfill processes.
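Here is a minimal sketch of that idea, assuming staged records that carry a per-attribute `flags` dictionary. The `revalidate` helper and the simplified E.164-style phone rule are hypothetical; the point is that a new rule runs over data already in staging, flagging or clearing attributes in place rather than triggering a re-load.

```python
import re

# Hypothetical staged records: raw data plus per-attribute CRU flags.
staged = [
    {"data": {"customer_id": "C1", "phone": "+44 20 7946 0018"}, "flags": {}},
    {"data": {"customer_id": "C2", "phone": "020-7946-0019"}, "flags": {}},
]

# New (hypothetical) business rule: phones must be E.164-style,
# i.e. "+" followed by up to 15 digits, ignoring spaces. A simplified check.
E164_RE = re.compile(r"^\+[1-9]\d{1,14}$")


def phone_rule(value) -> bool:
    return isinstance(value, str) and E164_RE.match(value.replace(" ", "")) is not None


def revalidate(records: list[dict], attr: str, rule, reason: str) -> int:
    """Apply a rule to already-staged records in place; return how many were newly flagged."""
    newly_flagged = 0
    for rec in records:
        if rule(rec["data"].get(attr)):
            # Passes: clear any earlier flag (assumes one rule per attribute).
            rec["flags"].pop(attr, None)
        else:
            if attr not in rec["flags"]:
                newly_flagged += 1
            rec["flags"][attr] = reason
    return newly_flagged


newly = revalidate(staged, "phone", phone_rule, "not E.164")
print(newly, [rec["flags"] for rec in staged])
```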

ELT vs. ETL: The Paradigm Shift

The “Capture, Receive, Update” philosophy inherently aligns with an ELT (Extract, Load, Transform) paradigm, fundamentally differentiating itself from traditional ETL (Extract, Transform, Load).

- Traditional ETL:

  - Extract: Data is pulled from the source.

  - Transform: Data is then heavily transformed and validated before it is loaded. This often occurs on a separate ETL server or engine. Any issues here can block the load.

  - Load: Only the perfectly transformed data is loaded into the target system.

- CRU-driven ELT:

  - Extract: Data is pulled from the source.

  - Load: Data is loaded directly into the MDM’s staging area (or raw layer within the MDM) with minimal pre-processing. The “lightweight ETL” mentioned earlier happens here, but it’s about making data consumable, not enforcing strict validation.

  - Transform: The heavy lifting of transformation, validation, and enrichment (e.g., standardization, deduplication, survivorship, correcting flagged data) happens within the MDM platform itself, leveraging its internal processing power and features.
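As an illustration of transformation inside the platform, the sketch below standardizes a match key, deduplicates on it, and applies a simple survivorship rule to build a golden record from rows that were already loaded with their CRU flags. The match key (trimmed, lower-cased email) and the rule (the most recent non-empty, unflagged value wins) are hypothetical simplifications; production MDM matching is typically fuzzy and configurable.

```python
from collections import defaultdict

# Hypothetical rows already loaded into staging (the "L" in ELT), with CRU flags.
staged = [
    {"source": "legacy_crm", "updated": "2021-03-01",
     "data": {"email": "Ada@Example.com ", "name": "A. Lovelace", "phone": None}, "flags": {}},
    {"source": "web_portal", "updated": "2024-06-15",
     "data": {"email": "ada@example.com", "name": "Ada Lovelace", "phone": "0207"},
     "flags": {"phone": "failed validation"}},
    {"source": "marketing", "updated": "2023-01-10",
     "data": {"email": "ada@example.com", "name": None, "phone": "+442079460018"}, "flags": {}},
]


def match_key(rec: dict) -> str:
    # Standardization for matching: trim and lower-case the email.
    return (rec["data"].get("email") or "").strip().lower()


def golden_records(records: list[dict], attrs=("name", "phone")) -> dict:
    groups = defaultdict(list)
    for rec in records:
        groups[match_key(rec)].append(rec)  # deduplication: group rows by match key
    golden = {}
    for key, recs in groups.items():
        # Survivorship: newest first (ISO dates sort lexically); per attribute,
        # the first non-empty value that carries no flag wins.
        recs = sorted(recs, key=lambda r: r["updated"], reverse=True)
        golden[key] = {
            attr: next((r["data"][attr] for r in recs
                        if r["data"].get(attr) and attr not in r["flags"]), None)
            for attr in attrs
        }
    return golden


golden = golden_records(staged)
print(golden)
```

The flagged phone number from the newest source is skipped, so the valid value from the older marketing record survives into the golden record.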

Implications for ELT:

- Leveraging MDM’s Processing Power: Instead of relying on external ETL tools for complex transformations, ELT leverages the MDM solution’s native capabilities for data quality, matching, survivorship, and validation. This streamlines the architecture.

- Data Lake/Warehouse Synergy: This approach is particularly synergistic with data lake or data warehouse strategies, where raw data is landed first, then transformed within the target environment for various analytical and operational uses.

- Faster Loading Times: By reducing the transformation burden pre-load, data can be ingested into the staging area much faster.

- Single Source of Truth for Transformations: All data quality rules and transformations are managed within the MDM platform, ensuring consistency and centralizing governance.

- Iterative Data Quality Improvement: The ability to load all data and then identify and address quality issues enables an iterative approach to data quality, rather than a “perfect-or-nothing” stance.
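One way to support that iterative loop is to profile the CRU flags after each remediation pass and watch the numbers move. The sketch below assumes each staged record carries a `flags` dictionary; the `quality_profile` helper, the sample data, and the remediation step are hypothetical.

```python
from collections import Counter


def quality_profile(records: list[dict]) -> dict:
    """Summarize CRU flags: failures per attribute and the share of clean records."""
    counts = Counter(attr for rec in records for attr in rec["flags"])
    clean = sum(1 for rec in records if not rec["flags"])
    return {
        "flag_counts": dict(counts),
        "clean_ratio": clean / len(records) if records else 1.0,
    }


# Iteration 1: profile straight after loading.
staged = [
    {"id": "C1", "flags": {}},
    {"id": "C2", "flags": {"email": "failed validation"}},
    {"id": "C3", "flags": {"email": "failed validation", "country": "failed validation"}},
    {"id": "C4", "flags": {"country": "failed validation"}},
]
before = quality_profile(staged)

# Iteration 2: stands in for a steward correcting the emails and re-running validation.
for rec in staged:
    rec["flags"].pop("email", None)
after = quality_profile(staged)

print(before)
print(after)
```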

Pretectum's key differentiator as an MDM solution is its “Accepting Data as Delivered” (CRU) approach, which allows all customer data, even imperfect or inconsistent data, to be ingested without immediate rejection. This enables faster data integration, reduces the burden on source systems, and shifts data quality remediation into the Pretectum platform itself, following an ELT paradigm. Ultimately, Pretectum helps businesses make better decisions, deliver personalized experiences, enhance customer loyalty, and ensure compliance by centralizing and refining customer data into a trusted “Golden Record.”

Contact us to learn more. #LoyaltyIsUpForGrabs